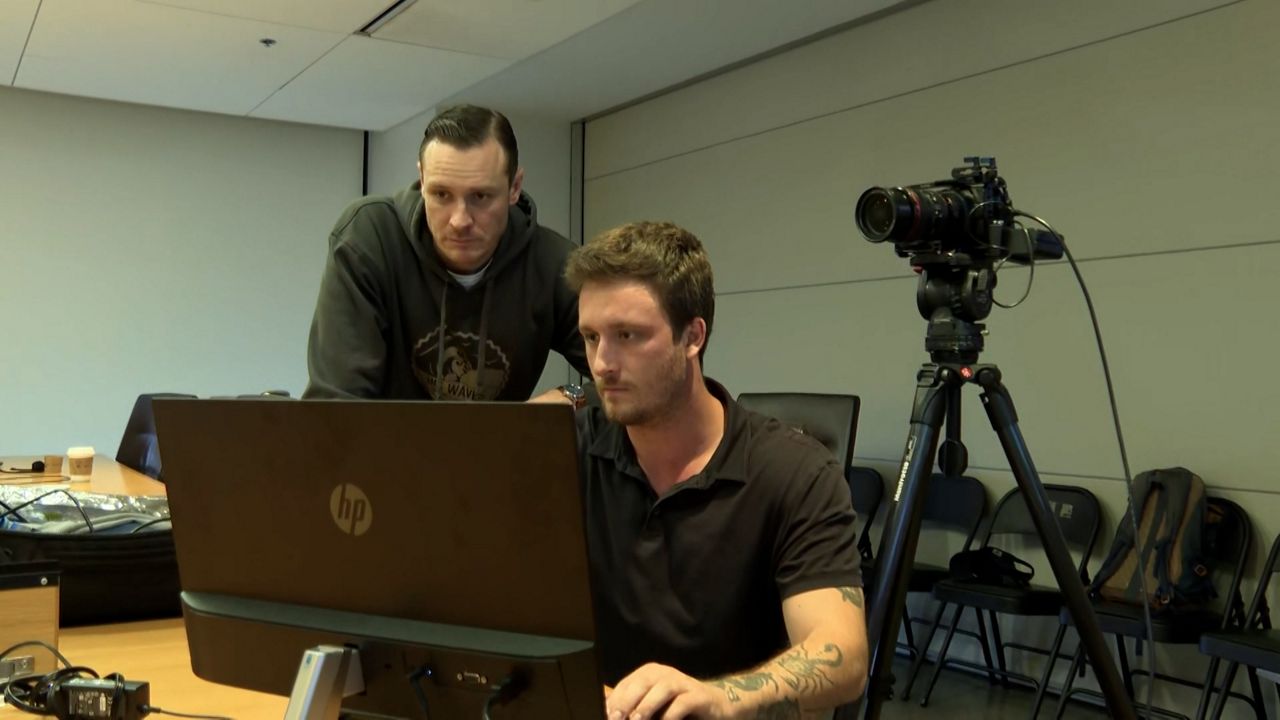

Sitting in a Los Angeles conference room, Charles H. Joslain looked into a nearby camera and told a lie.

“I am definitely Tom Cruise,” he said with a laugh.

Joslain is the COO and Head of Creative at the visual effects company VFX Los Angeles. He was at the Spectrum News studio to show off new so-called ‘deepfake’ technology, capable of transforming his image into other well-known figures in real time.

“There’s always that kind of ‘wow moment,’” he said.

VFX Los Angeles, which specializes in visual effects and motion graphics, has carved out a role in creating deepfakes for Hollywood. The company describes itself as "one of the few Deepfake experts in the world," doing this type of work for studios.

“At this point, I don’t think there’s any limit,” Joslain said. “Anything you can think - and therefore write - we can create. There’s really nothing we can’t do.”

To get a better idea of what’s possible, Spectrum News invited VFX Los Angeles to give a presentation. Our test subject was Aaden, a broadcast engineer for Spectrum News Los Angeles, who agreed to sit in front of the camera and have his likeness transformed.

First up was Meta CEO Mark Zuckerberg. In real time, Aaden’s eyes got wider, his brows got lighter, and his entire face morphed. He then switched from one billionaire to another. His skin, nose, mouth and eyes changed to resemble Elon Musk. The technology could even cross genders, as Aaden was altered yet again, to become Scarlett Johansson.

Finally, the team from VFX Los Angeles transformed Aaden yet again. His forehead became wrinkled and his eyebrows turned gray. Our test subject now resembled President Joe Biden.

“Feels good to be the president,” said Aaden with a laugh.

Izzy Traub, the company’s CEO and founder, said this display is just a small introduction to what is possible with this technology. With better lighting, more cameras, a more realistic body double, and post-shoot editing, he said they can create sophisticated, cinematic deepfakes, undetectable to the human eye.

“We’re at the stage now where we are pushing the limits of what it can do,” he said.

Traub started VFX Los Angeles after studying film at UCLA, and immediately started working with traditional visual effects. But Traub found himself increasingly interested in the possibilities of deepfakes, even though he said they were considered ‘gimmicks’ at the time.

“Because everything was so young back then,” Traub said. “I don’t think anybody really had any ideas for how you could use it for production. And we definitely weren’t thinking of it as a film tool.”

Traub said the first deepfake they did for a production was a de-aging project. From there, he said the technology continued to get more advanced. Joslain envisions a world where a director can shoot scenes without the primary actor, and use the deepfake technology to place the actor’s face on a body double.

“Now if you can not afford Tom Cruise for 20 days of filming, well maybe you can afford two days with him and you do all the close-ups. And now you get other actors - and we cast a double effectively to do all the wide shots and the driving shots and the running away shots. So now he gets to shoot a commercial in Tokyo the same day he gets to film with Martin Scorsese in L.A.,” Joslain said.

Despite the excitement, both Traub and Joslain conceded that there are dangers associated with deepfakes. The two acknowledged that, in the wrong hands, deepfakes can lead to rampant disinformation.

“I think there’s real risk for personal defamation or revenge,” said Traub.

Joslain said these deepfakes could cause more havoc on the local level, suggesting the technology could be used as ‘corporate warfare’ or between spouses. He said the more accessible this technology becomes, the more doubt it will create about what’s real and what isn’t.

“Aunty Joanne - who you don’t really like - said this outrageous thing about me,” he said, describing a possible scenario. “But did she say this outrageous thing about me? And now Thanksgiving is ruined.”

Nationwide, there are many examples of these scenarios starting to become a reality.

Last October, a group of teenage girls at a high school in Westfield, NJ, were horrified to find out their classmates were using AI to put their faces on nude images.

In January, the principal at a high school in Pikesville, MD, was seemingly caught making racist and antisemitic comments in an audio recording. Then police discovered that it was a deepfake created by another school employee with an ax to grind.

And then there was the phone call Democratic voters in New Hampshire received ahead of the state’s primary in January. The robo-call appeared to be from President Joe Biden, urging them to stay home.

“It’s important that you save your vote for the November Election,” the recording said.

The recording was a deepfake, the voice not real. Steven Kramer, the political consultant responsible for the AI phone call, is now facing a $6 million fine and more than two dozen criminal charges.

Ahead of the 2024 election, there is concern about this technology being used to create political disinformation that could alter people’s votes.

“The reality is that once something actually gets released, it only needs to be seen by a few thousand people in order for the damage to be done,” said Traub. “Even if it’s fake or not.”

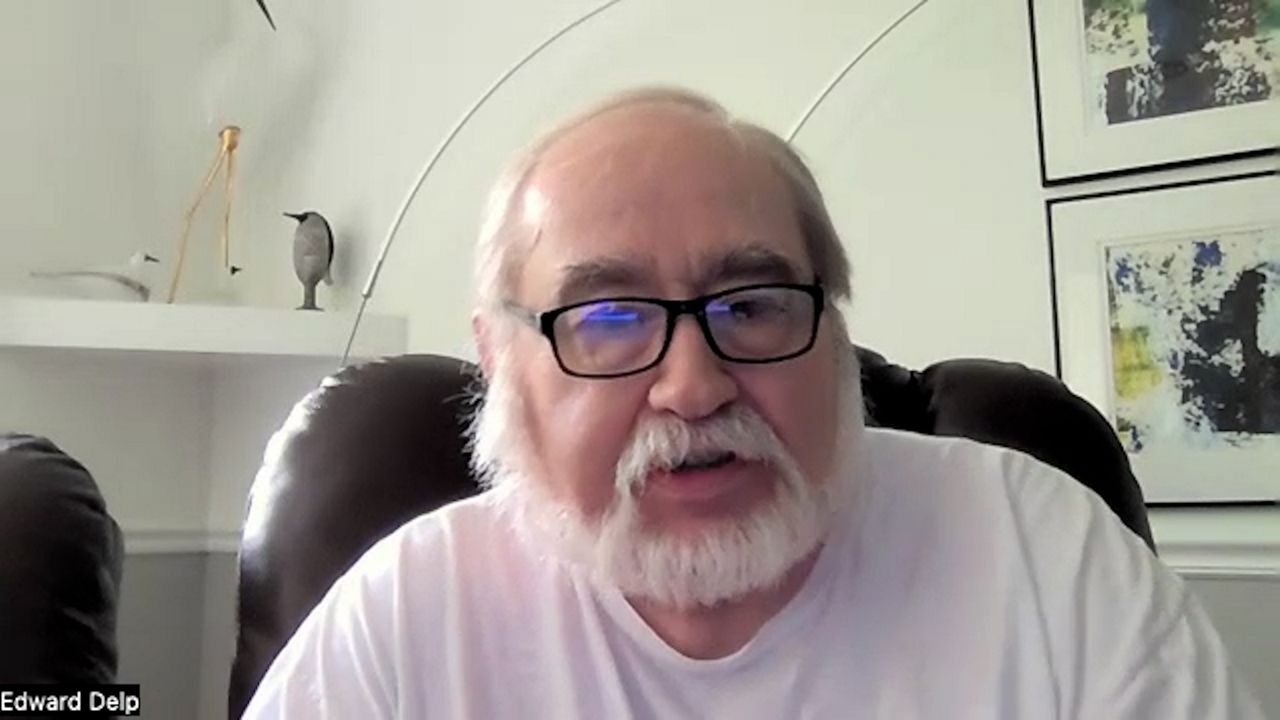

As deepfakes improve and become more prevalent, so do the efforts to fight back. Researchers, like Ed Delp at Purdue University, have been working to create detection software that can identify Deepfakes in real time.

“This idea of being able to detect these things,” said Delp. “It’s going to be like a continuous arms race.”

Delp was selected to lead a team at Purdue University, within the Semantic Forensics (SemaFor) program, overseen by the Defense Advanced Research Projects Agency (DARPA) within the Department of Defense.

DARPA has played a significant role in the creation of many modern technologies over the last six decades. The agency works with academic and industry partners, and offers significant funding, to research and develop emerging technologies.

The agency has contributed to the creation of innovative technologies like GPS, drones and even the Internet. Now, through the SemaFor program, the agency is putting its resources into the creation of Deepfake detection tools.

Delp said that they’ve made many advancements, and yet, he remains ‘worried’ about whether they’ll be able to keep up.

“The people that are making these tools are getting better and better,” he said. “And we need to get better to detect them.”

Delp said that there have been many advancements, including from private companies. In 2022, Intel designed a Deepfake detection tool called “FakeCatcher,” which the company said can detect fake video with a 96 percent accuracy rate. According to a release from the company, the tool can detect ‘blood flow’ in the pixels of a video to figure out if the video is real.

“These blood flow signals are collected from all over the face and algorithms translate these signals into spatiotemporal maps,” the release reads. “Then, using deep learning, we can instantly detect whether a video is real or fake.”

Delp said that Artificial Intelligence, crucial for Deepfakes, is also becoming part of the solution. He said AI is being used to train computer programs through the input of face videos, to detect what’s real and what’s not.

“Basically the method is looking at very subtle changes in the video,” he said. “And from there, it’s able to then make a decision about whether that video that’s been created is a Deepfake or not.”

Despite these incredible breakthroughs in detection software, Delp was realistic, noting that Deepfake platforms are always improving. He said what’s most concerning is how this technology is increasingly becoming available for people at home, without complex software.

“They’re going to be out there so the average person will be able to use them and then the question becomes - you know - what do we really believe. And that’s scary.”

The rapid growth of this Deepfake technology has gotten the attention of lawmakers on Capitol Hill. There’s been a flurry of mostly bipartisan bills proposed in both the House and the Senate to try and address this problem.

Protecting Consumers From Deceptive AI Act:

In the House, a bipartisan group of lawmakers including Rep. Anna Eshoo, D-Calif., and Rep. Neal Dunn, R-Fla., have co-sponsored the Protecting Consumers From Deceptive AI Act. This bill directs the National Institute of Standards and Technology (NIST) to facilitate the development of standards for identifying and labeling AI-generated content.

That includes potential requirements on AI developers to embed digital watermarks or metadata that can make Deepfake videos easily identifiable. These standards would also include certain requirements on online platforms, like YouTube or Facebook, to label Deepfakes.

“AI offers incredible possibilities,” said Eshoo in a March release on the bill. “But that promise comes with the danger of damaging credibility and trustworthiness. AI-generated content has become so convincing that consumers need help to identify what they’re looking at and engaging with online. Deception from AI-generated content threatens our elections and national security, affects consumer trust, and challenges the credibility of our institutions.”

Republican co-sponsor, Rep. Neal Dunn, R-Fla., also promoted the new legislation.

“The rise of innovation in the world of artificial intelligence is exciting; however, it has potential to do some major harm if left in the wrong hands,” said Rep. Dunn. “The Protecting Consumers from Deceptive AI Act protects Americans from being duped by deepfakes and other means of deception by setting standards for identifying AI generated content. Establishing this simple safeguard is vital to protecting our children, consumers, and our national security.”

Protect Elections From Deceptive AI Act:

In the Senate, another bipartisan bill targets the risk of artificial intelligence disrupting elections. The Protect Elections From Deceptive AI Act has numerous co-sponsors, including Sen. Amy Klobuchar, D-Minn., Sen. Josh Hawley, R-Mo., Sen. Chris Coons, D-Del., Sen. Susan Collins, R-Maine, and Sen. Michael Bennet, D-Colo.

The legislation would ban deceptive AI materials in political ads and would allow federal office seekers to ask US courts to order the removal of false AI information.

“Right now, we’re seeing AI used as a tool to influence our democracy,” said Sen. Klobuchar in a release about the bill. “We need rules of the road in place to stop the use of fraudulent AI-generated content in campaign ads. Voters deserve nothing less than full transparency.”

Sen. Hawley also supported the measure in a release on the bill.

“We must protect the right of Americans to vote without being controlled or manipulated by artificial intelligence companies,” he wrote. “Elections belong to the people, not the tech companies.”

The Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act:

Another Senate effort, pitched in October 2023, has received support from a bipartisan group of senators, including Sen. Chris Coons, D-Del., Sen. Marsha Blackburn, R-Tenn., Sen. Amy Klobuchar, D-Minn., and Sen. Thom Tillis, R-N.C.

According to a release on the bill, the ‘NO FAKES’ Act would “protect the voice and visual likeness” of people from unauthorized recreations using AI. The legislation was created after an AI-generated song replicating the voices of Drake and the Weeknd was released, generating a lot of concern among those in the music industry.

The legislation would hold companies or individuals liable for creating these unauthorized replicas, and would similarly hold platforms liable for hosting them. This legislation was initially released as a ‘discussion draft’ in fall 2023.

In April, the Subcommittee on Intellectual Property held a hearing on this bill, in which they heard testimony from musicians and music industry executives, who expressed concern.